The power of fuzz, or, llms are a ux technology

LLMs let us fuzz between human and software language and we should take advantage of that more

Published Jul 5, 2024

Modified Jul 17, 2024

17/7/2024 update: Rupert Parry’s Causal Islands 2023

I’ve been thinking about

Software can be designed to help people accomplish specific goals because the designers of that software understand the goals of people using it and embed that knowledge into the software itself. LLMs let software designers do this in ways that previously weren’t possible through their ability to fuzz.

Example: Translating Into Categories

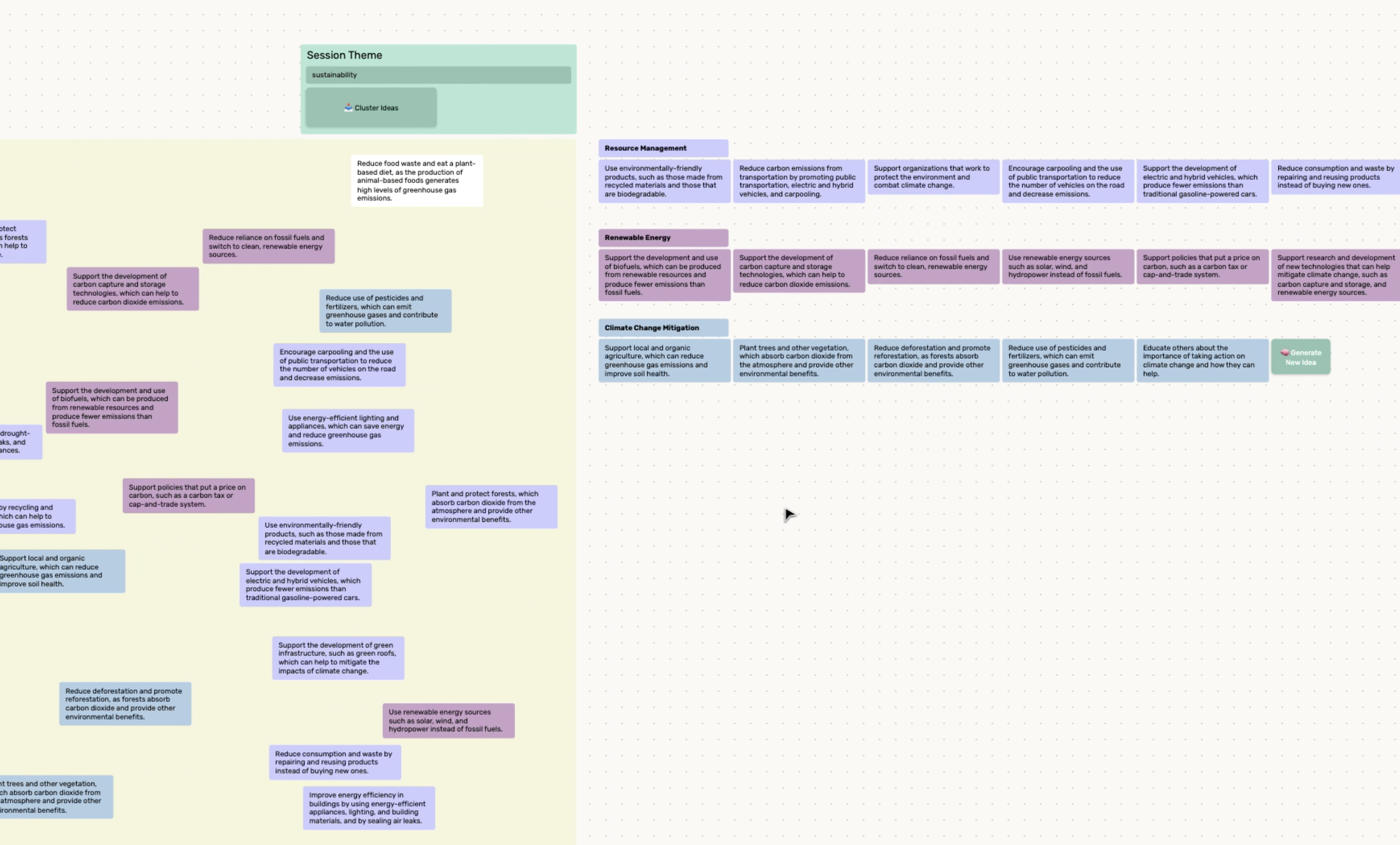

Here’s a

Something I could have done, instead of building this tool, is use ChatGPT and write a prompt asking it to categorize each idea. This approach might provide useful information, but it doesn’t take advantage of the fact that software is a dynamic medium. Instead, it just generates a new inert text artifact for me to read and then probably forget, or discard once it becomes outdated or I want to edit one of the ideas.

Instead of using ChatGPT, I built a tool that uses the exact same LLM, but situated within the software such that it can manipulate the software itself. It analyzes the group as a whole and comes up with a list of categories, and then physically places each individual review into one of the categories, color coding them as well.

This piece of software is already much more useful to me because instead of generating a static piece of text I have to read, it just modifies the already dynamic ideas similarly to how I can. It moves the ideas around just like I can, it changes their colors just like I can. I’m left with the same artifact I started with (text boxes) but transformed to be much more useful to me, and which I can continue to work with as I did before I asked for the AI to intervene.
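To make the "translate it into software language" step concrete, here’s a minimal sketch of what the receiving end could look like. This is not the actual code behind my tool: the `TextBox` fields, the JSON shape, the palette, and the layout constants are all assumptions made up for illustration, and the model call itself is replaced by a hard-coded stand-in response.

```python
import json
from dataclasses import dataclass


@dataclass
class TextBox:
    # Hypothetical stand-in for an idea on the canvas.
    id: str
    text: str
    x: float = 0.0
    y: float = 0.0
    color: str = "white"


# Illustrative palette and layout constants (assumptions, not from the tool).
PALETTE = ["lightblue", "lightgreen", "khaki", "pink"]
COLUMN_WIDTH = 220
ROW_HEIGHT = 60


def apply_categories(boxes, llm_json):
    """Move and recolor boxes according to a model's category assignments."""
    data = json.loads(llm_json)
    categories = data["categories"]
    rows_used = {name: 0 for name in categories}
    by_id = {box.id: box for box in boxes}

    for box_id, category in data["assignments"].items():
        box = by_id.get(box_id)
        if box is None or category not in rows_used:
            continue  # skip ids or categories the model invented
        column = categories.index(category)
        box.x = column * COLUMN_WIDTH               # one column per category
        box.y = rows_used[category] * ROW_HEIGHT    # stack boxes downward
        box.color = PALETTE[column % len(PALETTE)]  # color code by category
        rows_used[category] += 1
    return boxes


boxes = [
    TextBox("a", "Add dark mode"),
    TextBox("b", "Fix login crash"),
    TextBox("c", "Add keyboard shortcuts"),
]

# Stand-in for what a model might return when asked for JSON in this shape.
fake_response = json.dumps({
    "categories": ["Features", "Bugs"],
    "assignments": {"a": "Features", "b": "Bugs", "c": "Features", "z": "Bugs"},
})

apply_categories(boxes, fake_response)
```

The point of the sketch is the direction of travel: the model’s output never gets shown to me as text. It only exists long enough to be turned into positions and colors on things I can already drag around myself.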

ok…so?

This kind of thing is only possible because of an LLM’s ability to reach into the semantic content of text and translate it into software language (in this example: the physical position and color of the ideas, as well as their labels). That ability is, I’m arguing, both the main strength of LLMs as a tool in the toolbox of anyone designing software, and something we couldn’t really do before.

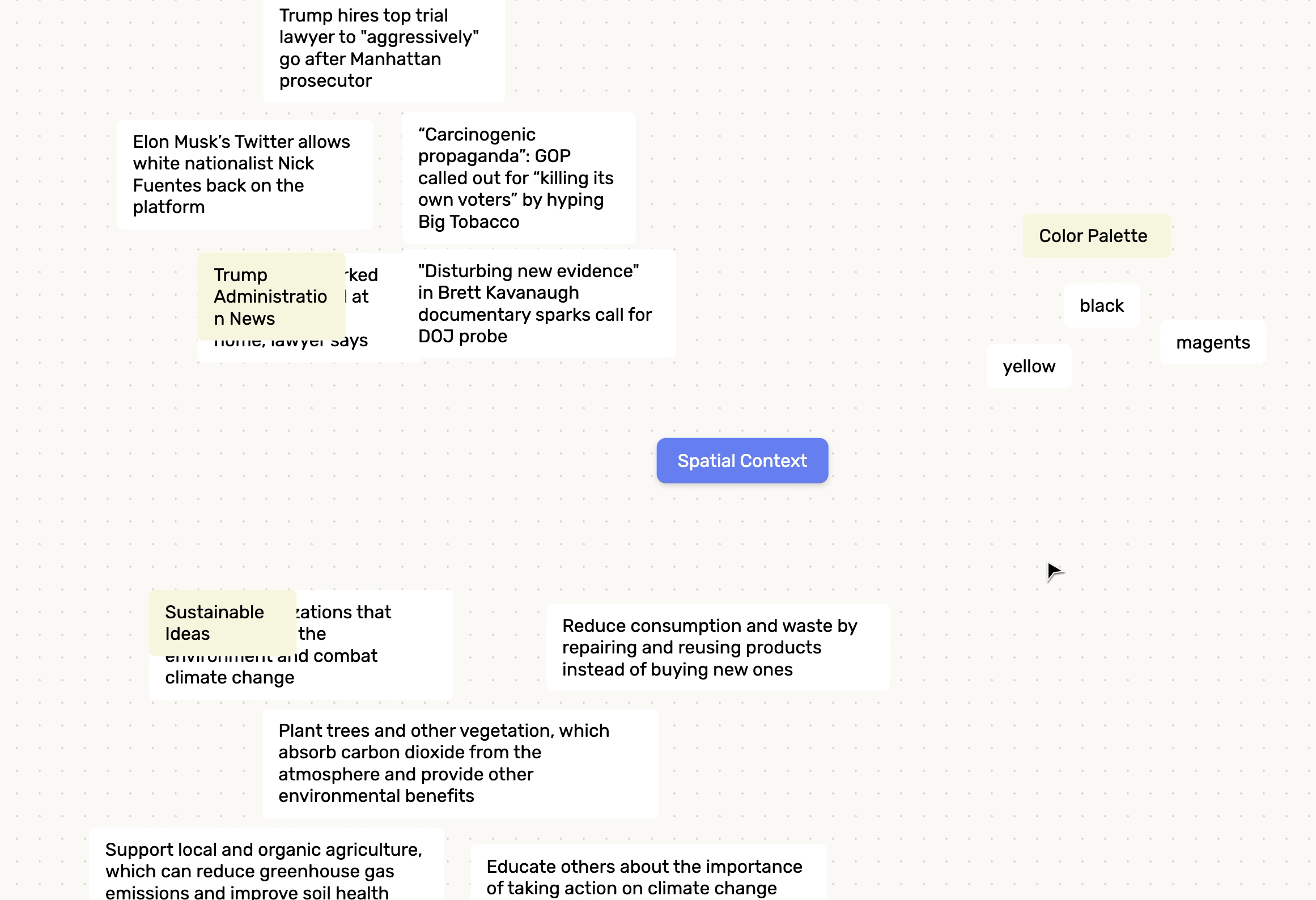

Another Example: Spatial Awareness

But! Grouping things into categories is something we can do, just not as quickly as this software. However, there are some tasks that humans struggle with that can be done in a similar way via an LLM.

Voilà! We’re speaking multiple languages now.

There is obvious utility to a system like this, and what I want to stress is that building something that does this was pretty much impossible in the pre-LLM times, specifically in how it translates the semantic content of the text into labels and, more importantly, their coordinates.
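Coordinates are where the translation gets fragile, because a model can happily hand back a point that is off the canvas or a label with no position at all. Here’s a rough sketch of how the software side might guard against that. Again, this is an assumption-heavy illustration and not the code from my experiment: the `Label` type, the JSON shape, and the canvas size are all hypothetical.

```python
import json
from dataclasses import dataclass


@dataclass
class Label:
    # Hypothetical label placed on a 2D canvas.
    text: str
    x: float
    y: float


def parse_labels(llm_json, width, height):
    """Turn a model's JSON list into labels that fit on the canvas."""
    labels = []
    for item in json.loads(llm_json):
        try:
            text = str(item["text"])
            x = float(item["x"])
            y = float(item["y"])
        except (KeyError, TypeError, ValueError):
            continue  # drop entries that are missing or malformed
        # Clamp so a stray coordinate can't push a label off the canvas.
        x = min(max(x, 0.0), width)
        y = min(max(y, 0.0), height)
        labels.append(Label(text, x, y))
    return labels


# Stand-in for a model response; a real one would come from an API call.
fake_response = json.dumps([
    {"text": "cheap", "x": 120, "y": 40},
    {"text": "expensive", "x": 950, "y": -10},
    {"text": "no coordinates"},
])

labels = parse_labels(fake_response, width=800.0, height=600.0)
```

In this sketch the second label gets pulled back to the canvas edge and the third is dropped, so the software only ever receives positions it can actually draw.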

This kind of thing was not even the most interesting UX research being done with LLMs when I posted it in May of 2023, BUT, a year later, I think that the power of what’s actually happening here has not yet been fully realized by people designing in the space (that’s you, intended reader!). We don’t know what we have our hands on! Taking human language and folding and squishing and fuzzing it into the language software speaks and back again unlocks a massive amount of design space, most of which I think is as of yet unexplored.

A last-minute example in the wild I liked

I recently relented and downloaded

This is a great example of the fuzz I’m talking about: translating human language (your bio) into software language (the little discussion prompts), and it’s exactly the kind of interaction we couldn’t build before LLMs.

Fuzz

In my head, I’ve been calling this semantic-software translation ability